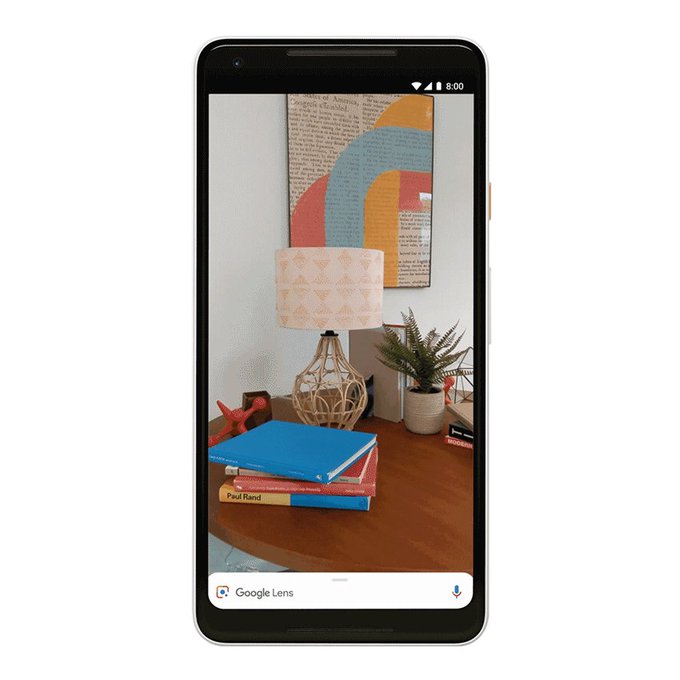

As part of the latest update, Google Lens is going to be built into 10 different Android devices’ native cameras, including Google Pixel 2 and LG G7 ThinQ devices, so you don’t have to open a separate app. There will also be a real-time finder that analyzes what your camera sees even before you press the shutter. If you point your camera at a poster of a musician, for example, Lens can start playing a music video.

Open a supported camera app, and Google Lens will tell you what’s in the image. The image recognition tool can give users more information about things like books, buildings, and works of art. It works like this: you take a photo, and the tool processes the pixels through machine learning to return more details along with relevant search tags.

Google has more of an eye on retail with this update to Lens: instead of just identifying clothes, it will also provide shopping links when it recognizes the brand or style. Lens can also recognize words now, so you can copy and paste from the real world into your phone, similar to what Google Translate already does with images.

There’s also a crossover between Google Lens and Maps this time around, which will add AR to Street View and help you navigate in real time.

The Android devices getting Google Lens inside their camera apps come from 10 manufacturers, LGE, Motorola, Xiaomi, Sony Mobile, HMD/Nokia, Transsion, TCL, OnePlus, BQ, and Asus, along with the Google Pixel.

The text recognition feature, dubbed smart text selection, lets you copy text from a physical document and then use it as you would any other bit of copied text. In a Google blog post,
